While impossible to cover the full breadth of the 204-page behemoth itself, for those curious I’ve included a computer science-only highlight summary of my Master’s thesis. I’m shying away from most bioscience terminology and avoiding most of the biological background, as the goal of this article is to overview my project for those outside the field.

Motivations:

Photosynthesis is incredibly important for life on Earth as we know it, as it creates and sustains the oxygen in the air that we breathe. Much of that oxygen is produced not only by plants (though they do play a large role) but by cyanobacteria (photosynthetic bacteria that also formed the foundation for how photosynthesis works in plants and algae) covering the world’s oceans. There are many basic biological questions about these essential creatures that we don’t understand. One involves how these life-giving membranes divide.

Analogy:

Cellular division is fairly well understood, but that’s only part of the puzzle of how such cells divide. If you imagine dividing a lasagna in two for two people to eat, you simply can’t separate the two halves without separating each layer from top to bottom; you have to make a full cross-sectional cut to separate the two pieces. Carrying this analogy forward, cellular division (division of the cellular membranes and the cells themselves) only handles how the top-most and bottom-most layers of the lasagna get cut—the inner layers (here, the thylakoid membranes) must also be split through some unknown mechanism.

Summary:

As a one-sentence summary of the thesis, I propose a short but comprehensive set of possibilities for how this (thylakoid membrane division) might occur, observe the process carefully in two divergent species of cyanobacteria using light microscopy, implement a new image segmentation technique tailored for this setup, and find that the two species appear to use different processes for dividing their thylakoid membranes, one orderly and one disorderly.

These findings suggest different approaches for discovering the mechanistic details of how this division occurs, and I lay the groundwork and a roadmap for future work in this area, including specifying possible genetic targets (though I’ll skip that here).

Figures:

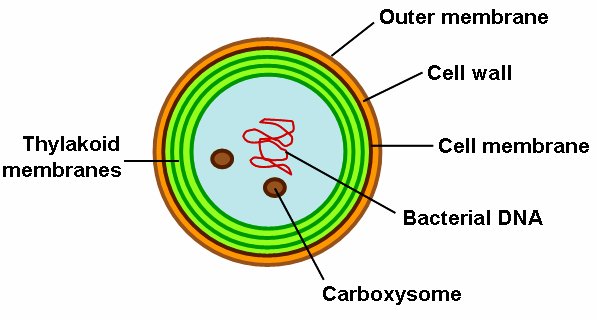

General (simplified) thylakoid membrane morphology, using genus Synechocystis as example. Source: Wikimedia Commons.

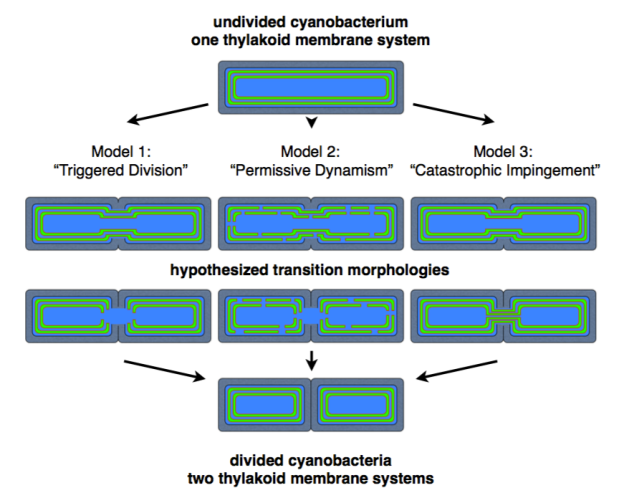

Division models proposed in my thesis (the thylakoid membranes are shown in green with red edges). On the left we have division triggered specifically in advance of the dividing cell membranes; in the middle we have sufficiently frequent division such that a triggering mechanism is not needed; on the right we have no division mechanism short of the thylakoids being forcefully “cut” by the dividing cell membranes.

Burden Placed on Image Analysis:

The relevant details here are those relating to the segmentation algorithm I developed and implemented. A few background observations are also important to note. First, these bacteria are small and grow in clusters with very little distance to separate each individual cell. The combination of these two factors in particular makes proper segmentation of each cell difficult. Edges, that when observed at a large scale are sharp, are instead necessarily blurry due to the diffraction limit of light (the cells are not much larger than the waves we’re using to image them, so resolution is inherently limited by the physics of light). The high magnification required to visualize the cells (and their internal membranes) also means that we won’t be able to afford a high density of photons per pixel in the image, which means that the images will be inherently noisy (grainy) as well. Attempts to mitigate either of these problems (the blur and the noise) by choosing a smaller wavelength or increasing the intensity of light will poison the cyanobacteria with light outside their acceptable range of energies (the photosystems are tuned to specific frequencies within the orange-red part of the visual spectrum, and they can only sustain so much irradiative intensity before being damaged and destroyed). Longer exposures miss out on potentially important second-by-second information about the division process (yes, I did find significant movements that occurred faster than the framerate could capture) and are also not ideal. As such, we unfortunately have to manage these problems in silico (computationally) instead of in vivo (physically). Offloading these physical problems onto the computational side of the project places a high burden on the image analysis system. Typical tools used to segment images of cells (typically much larger, eukaryotic cells) performed poorly, often segmenting clusters of cells and/or mere portions of cells.

Imaging Solutions:

I implemented a dual-channel approach in which two related but independent measures of cell shape were taken: one using phase contrast and one using the inherent fluorescence of the thylakoid membranes distributed throughout the cells. Phase contrast microscopy uses differences in refractive index (specifically the retardation of light waves passing through media of higher refractive index) and the ability of light to self-interfere to bring attention to the borders between objects of different refractive index (in a physical as opposed to non-computational way). It is the standard approach for cell segmentation, but as indicated earlier, due to the difficulties of this particular imaging setup, it performed poorly on its own. Consecutive phase contrast images were also too correlated and mistakes made on one by the general segmentation software were often repeated on the other. Cyanobacteria, due specifically to their light-feeding properties, are also fluorescent under certain wavelengths of light. I imaged each field alternatingly in quick succession using phase contrast and epifluorescence microscopy (epifluorescence specifically measures light emitted by a fluorescent object, as opposed to possible reflections or diffractions around it). These two orthogonal (in the informational though not geometrical sense) measures of cell shape provided much more information for accurately segmenting the cells, so I went about implementing my own image segmentation algorithm capable of simultaneously using information from both channels.

The benefits of using these two imaging techniques go deeper than just providing alternate estimates of the cells’ shapes. Each has its own associated gradient, and these gradients properly anti-align at the cell boundary (thylakoid fluorescence is brightest inside the cell, where the thylakoids actually are, and phase contrast is darkest inside the cell, due to the cell contents’ slowing of light as it passes through leading to interference). The information gathered from each source therefore had the greatest detail (toward the right side of the histogram in photographer’s terms) where the other had the least detail (toward the left side of the histogram); the sources were exceptionally complementary.

Algorithmic Approach:

The use of these complementary imaging methods made it feasible to segment cells, but what about the greater task of segmenting some ten thousand frames of a hundred or more cells each within a reasonable time frame? One typical approach is to use constricting polygons around each cell center with an “energy” function that penalizes large distance between the vertices of the polygon and further penalizes sharp angles between polygon edges in a manner tunable with hyperparameters. Such methods calculate the total energy around the perimeter of the cell many times as a local energy minimum is approached. Such an approach, aside from being computationally expensive when considering a million cell-frames, is weak both to local minima in noisy images and to thin cells pressed side-by-side in clusters, both of which are characteristics of the imaging context we’re considering here. I knew a more appropriate method would be required, and set out to design one from scratch starting from first principles and immediately considering this unique imaging context.

The longer-term goals of the imaging project were to carefully and accurately analyze aspects of cell shape and internal thylakoid membrane shape throughout the division cycle, which would require exceptionally accurate estimations of cell size and (angular) orientation for spatial alignments and averaging across multiple cells (for seeing through the fog of noise in particular). I therefore decided to highly prioritize accuracy in segmented cell shape at the cost of “missing” some cell-frames (i.e. biasing towards type II-like errors—dropping a correct cell outline—for the sake of minimizing type I-like errors—wrongly accepting an incorrect outline), which could be compensated for by just averaging over even more data. I’d need to develop a fast algorithm, preferably analyzing each cell-frame’s cell outline just once, in order to capture enough data for the high degree of averaging I’d be doing.

The Algorithm:

I decided on a snake-like algorithm that would start from the most recognizably cell-edge-like pixel around a cell center and progress one circumference along the cell edge pixel-by-pixel. The direction of travel by the edge finder was guided orthogonally by both the thylakoid epifluorescence and phase contrast image gradients (following directions in which the direction’s cross product with each gradient had high magnitude and opposite sign) and also by the previous directions traveled (allowing movements in directions for which the dot product of current direction and average previous direction are non-negative). In this manner, the algorithm would analyze only a local window of pixels around each cell’s edge only once, and it would terminate once it formed a closed loop of reasonable perimeter and eccentricity with centroid sufficiently similar to the last capture (as quality control to maintain accurate cell outlines). Each overall frame was first thresholded (with binary openings to reduce noise) in each image channel to best find cell centers and define reasonable realms in which the snakes could roam (for instance, not allowing them to stray off too far from the cell clusters). I tuned hyperparameters for each species of cyanobacteria (one rod-shaped, one approximately spherical) to best apply strict quality control to acceptable cell outlines that were being tracked and analyzed.

Each cell was assigned a uniquely identifying number and tracked over its lifetime from first detection to division into two separately segmentable cells. The cells were physically held in place by their being sandwiched between the imaging glass (slide) and a nutrient-containing agarose hydrogel to prevent drift, and the algorithm would remember each cells’ centroid for continued tracking and update said centroid with subsequent frame in case of drift (which did occur—in sudden, earthquake-like fashion, though maximal drift only reached 1-2 pixels per frame—as the agarose hydrogel slowly dried over the 24+ hours of imaging).

Data (such as calculated centroid, angular orientation, eccentricity, length, width, etc.) on each cell in each frame was saved in a large array, and a local snapshot of the cell centered at the cell’s centroid (in both phase contrast and epifluorescence) was saved to a directory particular to each cell. After the full segmentation run was completed, each cell’s course of division could be viewed in video format by stitching together that cell’s snapshot (in either phase contrast or epifluorescence) in each frame, along with an optional temporal Gaussian blur (applied over a few consecutive frames with a stride of 1) for image clarity. Furthermore, a composite video was created for each image type (phase contrast or epifluorescence) and species showing an average time course of division for that species by spatially rotating each cell’s images by their orientation at that time and temporally aligning each cell’s snapshots in reference to the frame of its completion of division.

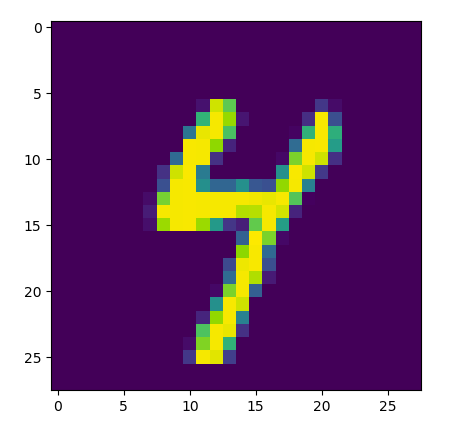

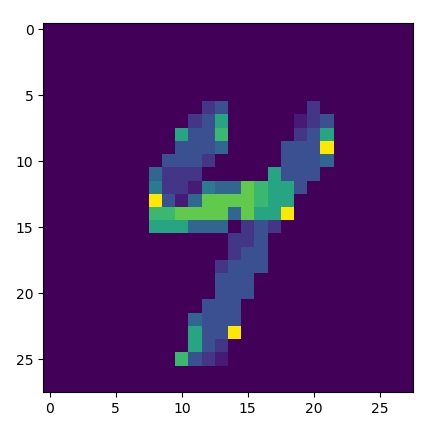

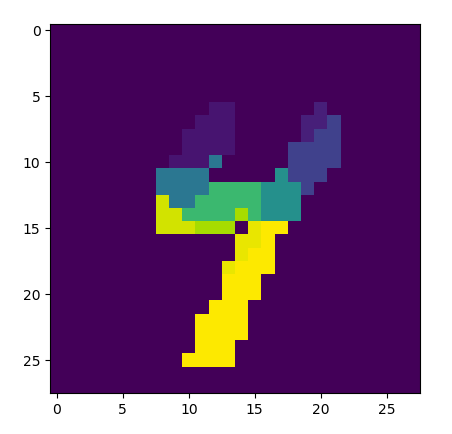

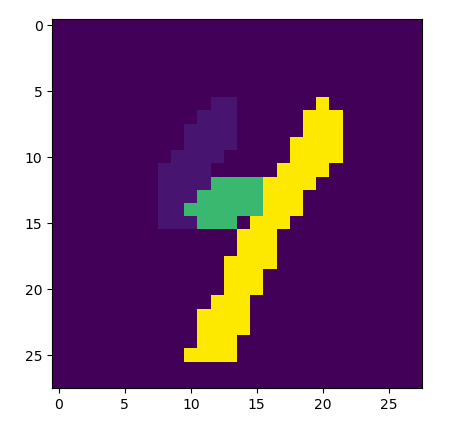

A Few Example Images:

Findings:

Aside from the aforementioned finding that one species’ thylakoid division process was orderly (appearing as a very clear, non-dynamic structure that would split along the division plane partway through cell division) and the other was disorderly (appearing as a highly dynamic set of constantly forming and breaking thylakoid connections between roaming thylakoid regions that seemed to make way for the dividing cell membranes on their own just through constantly rearranging), a few other results from the field were recapitulated. Cell division was found to be loosely synchronized in one species as previously reported and completely asynchronous in the other (also as previously reported).

Example of High-Throughput Analysis:

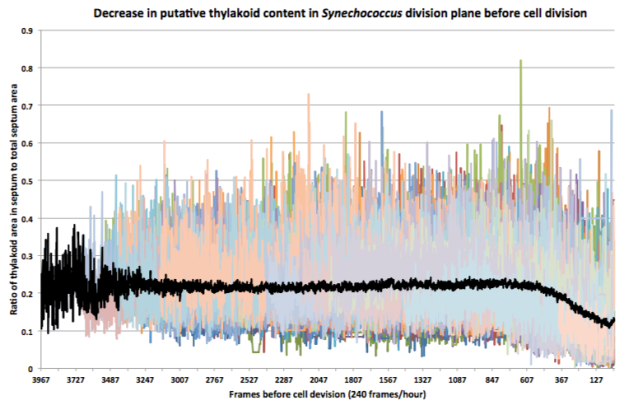

Due to the significant amount of noise, analysis was only possible using high-throughput and highly averaged approaches (necessitating the image segmentation algorithm). Over one hundred distinct cells’ data (colored traces in the background) were averaged over their lifetimes (black trace in the foreground) to generate insights. Here we see potential evidence of thylakoid membrane division in advance of the completion of cell division in Synechococcus, supporting Model 1 for this species.

Discussion:

Overall, this process gave me a solid experience-based foundation in methods of image segmentation and image analysis generally, which I carry with me in my current work as a data scientist. According to a recent survey-based report by Kaggle, image data is the third-most common data type used in the field of data science, after relational data and textual data, and comprises an estimated one sixth of data science work (including video data). Based on personal experience, it also seems to be relatively ignored among data scientists in training, who generally stick to the less esoteric realm of relational and textual data. It’s given me a greater perspective on the overall process of finding kernels of truth within seas of information as well as a few translatable skills that I can cross-apply to data analysis tasks in general.